ATLAS

Our research in high energy physics explores the elementary particles and the fundamental forces of nature with the ATLAS experiment at the CERN Large Hadron Collider (LHC) in Geneva. Our main research activities are currently the development of instrumentation, data collection, and physics analysis of the proton–proton collision data being accumulated by ATLAS.

The first proton–proton collisions at the LHC took place in 2009, and the first physics data were recorded in early 2010. Since then, the LHC has completed two data-taking runs: Run-1 (2010–2012) at collision energies of 7 and 8 TeV, and Run-2 (2015–2018) at 13 TeV. Run-3 started in 2022 at an energy of 13.6 TeV and will continue until the end of 2025.

With the data collected so far, we are sensitive to a variety of new physics models and can probe the properties of elementary particles and fundamental interactions with previously inaccessible precision.

In Uppsala we are especially interested in investigating whether the Higgs boson behaves as the Standard Model predicts, and in exploring its connections with top quarks, dark matter candidates, and other hypothetical new particles.

We are currently preparing for the high-luminosity upgrade of the LHC (HL-LHC) by developing new semiconductor technology for tracking.

The group welcomes new PhD students and postdocs to join its research program at ATLAS. Our research lines are described below.

Higgs boson pair production (HH)

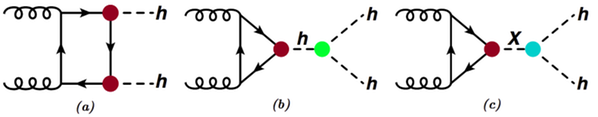

The Standard Model predicts the existence of a scalar particle, the Higgs boson, which was discovered by ATLAS and CMS at the LHC in 2012. It also predicts that this particle has the unique feature of coupling to itself. For a complete measurement of the so-called Higgs potential, and thereby a full validation of the Standard Model, events in which two 125 GeV Higgs bosons are produced simultaneously must be observed. Besides the Higgs self-coupling (illustrated in Figure b), another process leading to two Higgs bosons proceeds via a box-shaped loop of heavy particles, mostly top quarks (see Figure a).
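For reference, the standard textbook form of the Standard Model Higgs potential after electroweak symmetry breaking, expanded around the vacuum expectation value v ≈ 246 GeV, is shown below. The trilinear term λvh³ is the self-coupling probed by Higgs boson pair production; the numerical value of λ is derived from the measured mass and is only an order-of-magnitude illustration.

```latex
V(h) = \frac{1}{2} m_H^2 h^2 + \lambda v h^3 + \frac{1}{4} \lambda h^4,
\qquad
m_H^2 = 2 \lambda v^2
\;\Rightarrow\;
\lambda = \frac{m_H^2}{2 v^2} \approx \frac{(125\ \text{GeV})^2}{2\,(246\ \text{GeV})^2} \approx 0.13
```

In the Standard Model the same λ fixes both the Higgs mass and the self-coupling, so an independent measurement of the h³ coefficient directly tests the predicted shape of the potential.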

Unfortunately, these diagrams interfere destructively, and the cross-section for Higgs boson pair production at the LHC is very small, about three orders of magnitude smaller than for single Higgs boson production. Hence, evidence for this process is not expected before the HL-LHC. On the other hand, if Higgs boson pairs were observed in the current data or during Run-3 of the LHC, this would indicate new physics. Modifications of the Higgs boson self-coupling with respect to the Standard Model prediction may enhance the production cross-section by changing the interference pattern. Alternatively, Higgs boson pairs could be produced in the decay of a new resonance, as illustrated in Figure c.
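The effect of destructive interference can be illustrated with a toy model in which the pair-production rate is proportional to |κ_λ·A_triangle + A_box|², where κ_λ scales the self-coupling relative to the Standard Model. The amplitude values below are hypothetical real constants, chosen only to have opposite signs; the real amplitudes are complex and depend on the event kinematics, so this is a qualitative sketch rather than a cross-section calculation.

```python
def toy_hh_rate(kappa_lambda: float, triangle: float = -0.4, box: float = 1.0) -> float:
    """Toy HH rate in arbitrary units: |kappa_lambda * triangle + box|^2.

    `triangle` (self-coupling diagram) and `box` (top-quark loop) are
    hypothetical real amplitudes with opposite signs, so they interfere
    destructively.
    """
    amplitude = kappa_lambda * triangle + box
    return amplitude * amplitude


# Rate relative to the kappa_lambda = 1 (Standard-Model-like) value in this toy.
for k in (0.0, 1.0, 2.5, 5.0, 10.0):
    ratio = toy_hh_rate(k) / toy_hh_rate(1.0)
    print(f"kappa_lambda = {k:4.1f}: rate / SM-like rate = {ratio:6.2f}")
```

In this toy, κ_λ = 1 gives a lower rate than the box diagram alone (κ_λ = 0), while a strongly modified self-coupling such as κ_λ = 10 gives a much larger rate, which is the qualitative behaviour described above.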

The ATLAS group in Uppsala is pursuing searches for Higgs boson pairs in the final state with two b-quarks and two tau-leptons. With 36 fb⁻¹ of data collected in 2015–2016, this channel provides the most stringent exclusion limit, at about 10 times the Standard Model prediction, among all the individual analyses performed at the LHC. This search was recently reinterpreted in a scenario where pairs of leptoquarks are produced, each decaying into a b-quark and a tau-lepton. Our research group also contributes to the statistical combination of ATLAS searches for Higgs boson pairs and to their interpretation in terms of the Higgs self-coupling and of new physics in the Higgs sector beyond the Standard Model. One of the group members is also the ATLAS convener of the HH sub-group of the LHC Higgs Cross-Section Working Group, in which theoretical models involving Higgs boson pairs are reviewed and recommendations on cross-sections and new benchmarks are collected.
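The expected number of signal events is the product of cross-section, integrated luminosity and branching ratio. The sketch below applies this to the bbττ channel with the 36 fb⁻¹ quoted above. The Standard Model gluon-fusion HH cross-section at 13 TeV (about 31 fb) and the Higgs branching ratios to bb (about 58%) and ττ (about 6.3%) are approximate literature values assumed here, and no selection efficiency is applied, so the result is only a rough count of events produced before any event selection.

```python
def expected_events(sigma_fb: float, lumi_inv_fb: float, branching_ratio: float,
                    efficiency: float = 1.0) -> float:
    """N = sigma x integrated luminosity x BR x efficiency (fb x fb^-1 is dimensionless)."""
    return sigma_fb * lumi_inv_fb * branching_ratio * efficiency


# Approximate values, assumed for illustration only.
SIGMA_GGF_HH_13TEV_FB = 31.05
BR_H_TO_BB = 0.5824
BR_H_TO_TAUTAU = 0.06272

# Factor 2: either of the two Higgs bosons can be the one decaying to bb.
br_bbtautau = 2 * BR_H_TO_BB * BR_H_TO_TAUTAU
n_signal = expected_events(SIGMA_GGF_HH_13TEV_FB, 36.0, br_bbtautau)
print(f"HH -> bbtautau events produced in 36 fb^-1: about {n_signal:.0f}")
```

With these inputs, roughly 80 such events are produced in the whole dataset before trigger, reconstruction and selection efficiencies are applied, which shows why the channel is so challenging.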

Solving the Higgs Fine-tuning problem with Top partners (SHIFT)

The Higgs boson explains why the other elementary particles have masses, but its own mass is an enigma. The theoretical calculation involves quantum corrections that need to cancel to a precision of one part in 10¹⁹ in order to arrive at the measured Higgs boson mass of about 125 GeV (roughly 130 times the mass of the proton). This is called the Higgs fine-tuning problem. Popular proposed solutions introduce new heavy particles, so-called “top partners”, for example from supersymmetric models, that stabilise the corrections. The top partners resemble the heaviest known elementary particle, the top quark, hence the name. In Uppsala, we are interested in an alternative approach, namely that the Higgs boson is not an elementary particle but a composite one. Among other things, this could give rise to top partners in the form of vector-like quarks with interesting decay patterns.

The Uppsala team is part of the SHIFT collaboration, funded by the Knut and Alice Wallenberg Foundation (KAW). The collaboration also has members from Stockholm University and Chalmers University of Technology, as well as international collaborators.

Dark matter searches and long-lived particles

The nature of dark matter is one of the most pressing questions in particle physics. Dark matter is prevalent in the Universe, but beyond that we know very little about it. Since dark matter appears to interact only through gravity, we expect it to have mass. And if it has mass, it could be related to the Higgs boson, which couples to mass.

One of the research lines of the ATLAS group at Uppsala University explores the Higgs sector as a portal to new physics at the LHC. One of our recent papers presented the first search for composite dark sector particles (dark mesons) decaying to Standard Model particles through Higgs interactions.

In the absence of clear signs of new physics, subtle experimental signatures take centre stage. Long-lived particles (LLPs) are new particles with relatively long lifetimes that travel some distance inside ATLAS before decaying, producing complicated experimental signatures. A particle's lifetime depends mainly on its couplings and mass, so such particles are usually light or feebly coupled, like the particles that could arise from a dark sector connected to dark matter.
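The link between lifetime and where a particle decays can be made concrete: a particle with proper decay length cτ and momentum p travels on average a distance βγcτ = (p/m)cτ, and its decay position follows an exponential distribution. The sketch below computes the probability that such a particle decays between two distances from the collision point. It assumes the particle moves perpendicular to the beam, so that path length equals radius, and the numbers in the example are hypothetical, not ATLAS detector dimensions.

```python
import math


def mean_decay_length_mm(p_gev: float, mass_gev: float, ctau_mm: float) -> float:
    """Mean lab-frame decay length beta*gamma*c*tau, with beta*gamma = p/m."""
    return (p_gev / mass_gev) * ctau_mm


def decay_probability(r_min_mm: float, r_max_mm: float, decay_length_mm: float) -> float:
    """Probability that the decay happens between r_min and r_max (exponential decay law)."""
    return math.exp(-r_min_mm / decay_length_mm) - math.exp(-r_max_mm / decay_length_mm)


# Hypothetical example: a 10 GeV particle with 100 GeV momentum and c*tau = 10 mm.
length = mean_decay_length_mm(100.0, 10.0, 10.0)
p_displaced = decay_probability(30.0, 1000.0, length)
print(f"mean decay length = {length:.0f} mm, P(30 mm < r < 1 m) = {p_displaced:.2f}")
```

A larger cτ or a larger boost pushes the decays further out, producing the displaced signatures described above.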

Data science methods such as AI/ML can be crucial to reaching the full potential of dark sector searches in ATLAS, and of searches for new physics at the LHC and beyond in general. The group is active in this area as well.

Past activities on charged Higgs bosons (H+)

In the Standard Model, one complex scalar Higgs doublet is responsible for the electroweak gauge symmetry breaking, giving rise to only one physical Higgs boson. In so-called Two Higgs Doublet Models (2HDM), such as the Minimal Supersymmetric extension of the Standard Model (MSSM), two complex scalar Higgs doublets are responsible for the electroweak symmetry breaking. As a result, the Higgs spectrum consists of five physical states: two charged and three neutral bosons. Until recently, the ATLAS group in Uppsala played a significant role in searching for charged Higgs bosons.
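The number of physical states follows from a standard degree-of-freedom count: each complex doublet contains four real fields, and three of them become the longitudinal polarisations of the W⁺, W⁻ and Z bosons.

```latex
\text{SM: } \; 4 - 3 = 1 \;(h),
\qquad
\text{2HDM: } \; 2 \times 4 - 3 = 5 \;(H^+,\, H^-,\, h,\, H,\, A)
```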

If the charged Higgs boson is lighter than about 170 GeV, it can be produced in the decay of top quarks, which are copiously pair-produced in proton–proton collisions at the LHC. In that case, the main decay is H+→τν, where the tau-lepton may decay either to lighter leptons or to hadrons. This decay channel may still have a sizeable branching fraction for heavier charged Higgs bosons; however, the dominant decay channel is then H+→tb. Both decay channels were searched for in ATLAS during Run-1 and the first half of Run-2 of the LHC, across a broad range of charged Higgs boson masses.
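The “about 170 GeV” condition is a kinematic threshold: the decay t → bH⁺ is only possible if the charged Higgs boson is lighter than the top quark minus the b-quark. The quark masses below are approximate values assumed for illustration.

```python
M_TOP_GEV = 172.5  # approximate top-quark mass (assumed)
M_B_GEV = 4.18     # approximate b-quark mass (assumed)


def top_decay_to_charged_higgs_allowed(m_hplus_gev: float,
                                       m_top_gev: float = M_TOP_GEV,
                                       m_b_gev: float = M_B_GEV) -> bool:
    """True if t -> b H+ is kinematically open, i.e. m(H+) < m(t) - m(b)."""
    return m_hplus_gev < m_top_gev - m_b_gev


for mass in (120.0, 160.0, 170.0, 200.0):
    print(mass, top_decay_to_charged_higgs_allowed(mass))
```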

ATLAS trigger performance and monitoring

In the LHC, proton bunches cross every 25 nanoseconds when the accelerator is operating at maximum capacity. Each resulting event produces about 1 MB of data in the ATLAS detector. Because of sheer limitations in storage and read-out capacity, only a small fraction of the events can be kept. The ATLAS trigger is the hardware- and software-based system that takes a first look at each event and decides whether it is potentially interesting and should be stored, or whether it should be discarded forever.
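These numbers translate into a simple data-rate estimate. The 25 ns spacing and the 1 MB event size come from the text; the 1 kHz recording rate is a hypothetical round number, used only to show the order of magnitude of the rejection the trigger must provide.

```python
def bunch_crossing_rate_hz(spacing_ns: float) -> float:
    """Number of bunch crossings per second for a given bunch spacing."""
    return 1e9 / spacing_ns


crossings_per_s = bunch_crossing_rate_hz(25.0)  # 40 MHz
event_size_bytes = 1e6                          # about 1 MB per event
raw_rate_bytes_per_s = crossings_per_s * event_size_bytes

recorded_rate_hz = 1000.0  # hypothetical output rate of the trigger
kept_fraction = recorded_rate_hz / crossings_per_s

print(f"raw data rate: {raw_rate_bytes_per_s / 1e12:.0f} TB/s")
print(f"fraction of crossings kept: 1 in {1 / kept_fraction:.0f}")
```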

Trigger data quality monitoring

The ATLAS detector should not only collect data; it should collect the best data. During data-taking it is crucial to continuously monitor the data quality so that problems can be corrected immediately. Otherwise, the collected data might be biased or compromised in some other way.

At Uppsala University, we are involved in monitoring the tau trigger data quality, including maintaining the software, as well as in the overall data quality monitoring from a trigger point of view.
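A basic building block of automatic data quality monitoring is comparing a histogram from the current run with a reference histogram and assigning a status. The sketch below uses a chi-square comparison of the two normalised histograms with a traffic-light status; the function names, the test statistic and the thresholds are hypothetical choices for illustration, not the ATLAS monitoring software.

```python
from typing import Sequence


def chi2_per_ndf(observed: Sequence[float], reference: Sequence[float]) -> float:
    """Chi-square per degree of freedom between two histograms normalised to unit area."""
    n_obs, n_ref = sum(observed), sum(reference)
    if n_obs == 0 or n_ref == 0:
        raise ValueError("both histograms must have entries")
    chi2, used_bins = 0.0, 0
    for o, r in zip(observed, reference):
        variance = o / n_obs**2 + r / n_ref**2  # Poisson variance of the normalised contents
        if variance == 0.0:
            continue  # both bins empty: no information
        chi2 += (o / n_obs - r / n_ref) ** 2 / variance
        used_bins += 1
    return chi2 / max(used_bins - 1, 1)  # one constraint from the normalisation


def dq_status(observed: Sequence[float], reference: Sequence[float],
              yellow: float = 2.0, red: float = 5.0) -> str:
    """Hypothetical traffic-light flag based on chi2/ndf thresholds."""
    score = chi2_per_ndf(observed, reference)
    if score < yellow:
        return "green"
    return "yellow" if score < red else "red"


reference_run = [120, 340, 410, 260, 90]
print(dq_status([118, 335, 420, 250, 95], reference_run))  # similar shape
print(dq_status([400, 100, 50, 30, 10], reference_run))    # distorted shape
```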

The luminosity upgrade of the LHC to a High Luminosity LHC (HL-LHC)

The LHC is preparing to increase its luminosity by an order of magnitude in the middle of the next decade. The aim is to discover new physics processes and to study known interactions with high precision. This requires a major rebuild of the ATLAS experiment, the most important part of which is the replacement of the tracker.
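One consequence of higher luminosity is a larger number of simultaneous proton–proton interactions per bunch crossing (pile-up), μ = Lσ_inel/(n_b f_rev). The sketch below evaluates this; the inelastic cross-section of about 80 mb, the bunch numbers and the peak luminosities are approximate values assumed for illustration, while 11245 Hz is the LHC revolution frequency.

```python
LHC_REVOLUTION_FREQUENCY_HZ = 11245.0


def mean_pileup(lumi_cm2_s: float, sigma_inel_mb: float, n_bunches: int,
                f_rev_hz: float = LHC_REVOLUTION_FREQUENCY_HZ) -> float:
    """Average number of inelastic pp interactions per bunch crossing."""
    sigma_inel_cm2 = sigma_inel_mb * 1e-27  # 1 mb = 1e-27 cm^2
    return lumi_cm2_s * sigma_inel_cm2 / (n_bunches * f_rev_hz)


# Assumed, approximate machine parameters.
mu_design = mean_pileup(1.0e34, 80.0, 2808)
mu_hl_lhc = mean_pileup(7.5e34, 80.0, 2760)
print(f"design LHC: mu ~ {mu_design:.0f}, HL-LHC: mu ~ {mu_hl_lhc:.0f}")
```

With these assumptions, the pile-up grows from about 25 to about 190 interactions per crossing, which illustrates why the tracker needs both finer granularity and higher radiation tolerance.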

Inner TracKer (ITK)

The tracker is the detector system in ATLAS closest to the point where the protons collide.

The particles created in the proton–proton collisions travel through the tracker. Charged particles deposit small signals in the detector layers of the tracker as they pass through. The signals are collected by the tracker’s millions of readout channels, and this information is used to reconstruct the path of each particle. Since the tracker is placed in a 2 T magnetic field, the paths of charged particles are curved, which allows us to measure their momentum. The spatial resolution of the tracker’s detecting elements is high, making it possible to locate the decay vertices of particles with high 3D precision. This allows us to identify particles by their lifetime.
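The momentum measurement rests on a standard relation: a track with transverse momentum p_T in a magnetic field B bends with radius R = p_T/(0.3 B), with p_T in GeV, B in tesla and R in metres (0.3 is shorthand for 0.2998). The sketch below also computes the sagitta, the small deviation from a straight line over a lever arm L, which is what the detector actually has to resolve. The 1 m lever arm in the example is a hypothetical round number.

```python
def radius_of_curvature_m(pt_gev: float, b_tesla: float = 2.0) -> float:
    """Bending radius in metres for transverse momentum in GeV and field in tesla."""
    return pt_gev / (0.299792458 * b_tesla)


def sagitta_mm(pt_gev: float, lever_arm_m: float, b_tesla: float = 2.0) -> float:
    """Sagitta s ~ L^2 / (8 R), valid when the lever arm L is much smaller than R."""
    radius = radius_of_curvature_m(pt_gev, b_tesla)
    return 1000.0 * lever_arm_m**2 / (8.0 * radius)


for pt in (10.0, 100.0, 1000.0):
    print(f"pT = {pt:6.0f} GeV: R = {radius_of_curvature_m(pt):8.1f} m, "
          f"sagitta over 1 m = {sagitta_mm(pt, 1.0):6.3f} mm")
```

At 1 TeV the sagitta over one metre is below 0.1 mm, which is why the high spatial resolution of the sensors matters.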

The high interaction rate in ATLAS generates intense radiation that damages the detector elements. A few years into the next decade, the radiation damage will be severe enough to degrade the performance of the tracker. For this reason, we are now preparing to replace the current tracker. Uppsala, together with our Scandinavian collaborators in Lund, Copenhagen and Oslo, is setting up a production line for new silicon strip detector modules that will be more radiation-hard, faster and better performing than their predecessors.

To make use of modern production facilities, we collaborate with a Swedish electronics company. Initial small-scale R&D is done in-house, targeting a production method that can be transferred to industry. Specialized interconnection methods, as well as quality assurance and quality control, will be handled in academia.

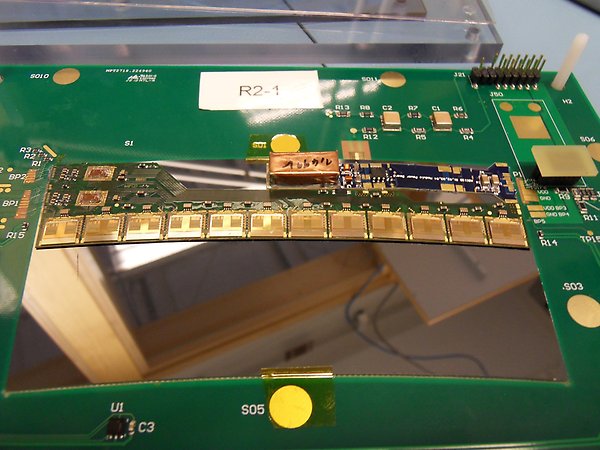

A detector module for the End-Cap strip part of ITK.

As shown in the figure, the module consists of a large segmented silicon sensor wire-bonded to 12 Application Specific Integrated Circuits (ASICs). These read out the small signals from the sensor and buffer the information until they receive a trigger signal to send the data to the Data Acquisition System (DAQ). Two small ASICs on the module handle the data transfer between the readout ASICs and the DAQ. All ASICs are mounted on a lightweight, high-density flexible Printed Circuit Board (PCB). The final ITK will have a large number of detector modules of various sizes depending on their position in the tracker. Because of the size and complexity of the tracker, the detector modules will be built by an international collaboration spanning Europe, the Americas and Asia. The Scandinavian production line is focused on two module types.
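The buffering described above can be pictured as a fixed-depth pipeline: in every bunch crossing the front-end stores its hits, the oldest entry falls out once the pipeline is full, and a trigger request can only retrieve crossings that are still inside it. The class below is a hypothetical software model of this idea, not the actual ASIC logic; the depth is a free parameter (for example, a latency of 10 µs at 25 ns per crossing would correspond to 400 crossings).

```python
from collections import deque
from typing import Deque, List, Optional, Tuple


class ToyReadoutPipeline:
    """Hypothetical model of a front-end buffer keeping the last `depth` bunch crossings."""

    def __init__(self, depth: int) -> None:
        # A deque with maxlen silently discards the oldest entry when full.
        self._pipeline: Deque[Tuple[int, List[int]]] = deque(maxlen=depth)

    def store(self, bunch_crossing: int, hit_channels: List[int]) -> None:
        """Record the channels hit in one bunch crossing."""
        self._pipeline.append((bunch_crossing, hit_channels))

    def trigger(self, bunch_crossing: int) -> Optional[List[int]]:
        """Return the hits of a triggered crossing, or None if it has left the buffer."""
        for stored_crossing, hits in self._pipeline:
            if stored_crossing == bunch_crossing:
                return hits
        return None


pipeline = ToyReadoutPipeline(depth=4)
for bx in range(10):
    pipeline.store(bx, [bx * 10, bx * 10 + 1])
print(pipeline.trigger(8))  # still buffered
print(pipeline.trigger(3))  # too late: already overwritten
```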

Contact

Myrto Asimakopoulou

Hardware Tracking for the Trigger

A major drawback of a high-granularity tracker is the massive amount of data recorded. Today there is a serious bottleneck in the bandwidth for transferring that data from the tracker to the DAQ: in current data recording, we can only read out tracking information for 1 physics event in 400. Bandwidth is, however, not the only problem. Even if all tracking data were read out, we would still not have the capacity to reconstruct it.

The first problem could in principle be solved by adding more services for data transfer. The price, however, would be a significant increase in material in the tracker, which would not only degrade the physics performance but also increase the radiation level. The solution is therefore to develop an intelligent data reduction scheme on the detector.

ATLAS is developing a two-level data reduction scheme called Hardware Tracking for the Trigger that will make it possible to read out 1 event in 10. However, this requires a massive increase in processing capacity for data reconstruction. Furthermore, the reconstruction will need to be done within a few microseconds, so that the majority of the data can remain buffered in the ITK (the maximum buffering capacity of the ITK readout ASICs is a few microseconds).

High-throughput, low-latency computing of this kind can only be achieved with hardware-accelerated processing. We are currently working on hardware acceleration using Field Programmable Gate Arrays (FPGAs).
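One well-known way of making track finding fast enough for hardware is pattern matching: hits are first converted to coarse “superstrip” identifiers, and each event is compared with a precomputed bank of patterns, one superstrip per layer, allowing for a missing layer. In an FPGA or dedicated chip, all patterns are compared in parallel; the Python sketch below only illustrates the logic sequentially, and the pattern bank and layer count are hypothetical, not the design used in ATLAS.

```python
from typing import List, Sequence, Set, Tuple

Pattern = Tuple[int, ...]  # one coarse superstrip ID per detector layer


def matched_roads(pattern_bank: Sequence[Pattern],
                  hits_per_layer: Sequence[Set[int]],
                  min_layers: int) -> List[Pattern]:
    """Return the patterns ("roads") with a hit in at least `min_layers` layers."""
    roads = []
    for pattern in pattern_bank:
        layers_with_hit = sum(
            1 for layer, superstrip in enumerate(pattern)
            if superstrip in hits_per_layer[layer]
        )
        if layers_with_hit >= min_layers:
            roads.append(pattern)
    return roads


bank = [(1, 2, 3, 4), (5, 6, 7, 8), (1, 2, 9, 9)]
event_hits = [{1, 5}, {2}, {3}, {8}]  # superstrips fired in each of four layers
print(matched_roads(bank, event_hits, min_layers=3))
```

Only the hits belonging to matched roads then need a precise track fit, which is where the reduction in processing comes from.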

Together with the WADAPT collaboration, we are investigating the use of high-frequency wireless transmission to increase the readout bandwidth from the tracker without adding material from services. Short-range links could also enable inter-layer communication in the tracker, which may be a powerful way to reduce the data volume even further by exploiting topological information. The goal is to eventually be able to read out all physics events in the tracker. This would be particularly attractive for Dark Matter searches and new exotic physics.

Contact

Mikael Mårtenson

Track triggers for long-lived particles (LLP)

Most new physics searches at the LHC target potential new particles that decay very quickly after they are produced. This has driven the design of the detectors and of the filters put in place to select potentially interesting events for further processing.

However, many plausible new physics models include metastable or stable new particles with long decay lengths, so-called “long-lived” particles (LLPs). In particular, LLPs appear as Dark Matter candidates or as new particles linked to a Dark Sector potentially accessible at the LHC. With the current event selection and reconstruction, we may be missing such signals.

Tracking information could be used to select events with displaced tracks or vertices, or to tag events with “disappearing tracks”.
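Selecting displaced tracks typically relies on the transverse impact parameter d0, the distance of closest approach of the track to the beam line in the transverse plane. The sketch below uses a straight-line approximation that ignores track curvature, and a hypothetical 2 mm threshold; both are simplifications for illustration.

```python
import math


def transverse_impact_parameter_mm(x_mm: float, y_mm: float, px: float, py: float) -> float:
    """|d0| of a straight line through (x, y) with direction (px, py), beam line at the origin."""
    return abs(x_mm * py - y_mm * px) / math.hypot(px, py)


def is_displaced(x_mm: float, y_mm: float, px: float, py: float,
                 d0_cut_mm: float = 2.0) -> bool:
    """Flag tracks whose impact parameter exceeds a (hypothetical) threshold."""
    return transverse_impact_parameter_mm(x_mm, y_mm, px, py) > d0_cut_mm


print(is_displaced(0.0, 0.0, 1.0, 1.0))   # prompt track from the beam line
print(is_displaced(0.0, 15.0, 3.0, 0.5))  # track through a point 15 mm from the beam line
```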

In the ATLAS group at Uppsala University, we are tying together our interest in Dark Matter searches and our expertise in track triggers to design future mechanisms that identify LLPs already at trigger level at the HL-LHC.

Infrastructure and support

A great deal of work is needed to keep the ATLAS detector and the surrounding infrastructure running. The following projects are highly important support functions for the research.

Computing GRID

The development of a distributed computing GRID has been essential for the success of the LHC program. It served as a pathfinder for the distributed computing now available in commercial clouds, and it is still the flagship for data processing in the sciences. The GRID middleware ARC (Advanced Resource Connector), developed in the Nordic countries, is widely recognized and used in computing infrastructures around the world. ARC is under continuous development, and we are currently working on improving its support for submitting computing jobs that require mixed CPU-GPU architectures.
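Supporting mixed CPU-GPU jobs means that a job must state its GPU requirements and the system must match them to suitable resources. The toy first-fit matcher below illustrates only that matching step; it is a hypothetical sketch and does not reflect ARC's job description language, interfaces or scheduling algorithm.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpus: int
    free_gpus: int


@dataclass
class Job:
    name: str
    cpus: int
    gpus: int = 0


def assign_first_fit(job: Job, nodes: List[Node]) -> Optional[str]:
    """Place the job on the first node with enough free CPUs and GPUs; return its name or None."""
    for node in nodes:
        if node.free_cpus >= job.cpus and node.free_gpus >= job.gpus:
            node.free_cpus -= job.cpus
            node.free_gpus -= job.gpus
            return node.name
    return None


cluster = [Node("cpu-node", free_cpus=32, free_gpus=0),
           Node("gpu-node", free_cpus=16, free_gpus=2)]
jobs = [Job("ml-training", cpus=8, gpus=1),
        Job("simulation", cpus=8),
        Job("multi-gpu-fit", cpus=4, gpus=2)]
placements = {job.name: assign_first_fit(job, cluster) for job in jobs}
print(placements)
```

A real system also has to handle queueing, memory and different GPU types; the sketch only shows why GPU counts must be part of the job request.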

Fake taus

Many searches for new physics and measurements of Standard Model processes in ATLAS involve signatures with hadronically decaying tau-leptons, which are reconstructed as narrow jets with one or three associated tracks. Multivariate techniques are employed to distinguish such tau objects from quark- or gluon-initiated jets; however, there is always a sizeable background from misidentified taus in ATLAS, and simulation is usually not reliable enough to estimate it. A number of data-driven background estimation techniques are therefore employed instead. The main challenge of such methods is that quark- and gluon-initiated jets have different probabilities of faking taus, so it is crucial to estimate the fractions of quark- and gluon-initiated jets in the data sample used in any given analysis before computing the fake-tau background. Our group in Uppsala plays a leading role in the ATLAS Fake Tau Task Force (FTTF), created in 2018 to provide central recommendations on the data-driven estimation of fake taus and on their associated systematic uncertainties.
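A widely used data-driven approach is the fake-factor method: a fake factor, measured in a control region, converts the number of events whose tau candidates fail the identification into an estimate of fakes that pass it. Because quark- and gluon-initiated jets have different fake factors, the effective value depends on the quark fraction of the sample, as in the sketch below. The choice of method, the function names and all numbers are hypothetical illustrations, not FTTF recommendations.

```python
def effective_fake_factor(quark_fraction: float, ff_quark: float, ff_gluon: float) -> float:
    """Mix the quark- and gluon-jet fake factors according to the quark-jet fraction."""
    if not 0.0 <= quark_fraction <= 1.0:
        raise ValueError("quark_fraction must be between 0 and 1")
    return quark_fraction * ff_quark + (1.0 - quark_fraction) * ff_gluon


def fake_tau_estimate(n_fail_id: float, quark_fraction: float,
                      ff_quark: float, ff_gluon: float) -> float:
    """Estimated number of fake taus passing the identification, from events failing it."""
    return n_fail_id * effective_fake_factor(quark_fraction, ff_quark, ff_gluon)


# Hypothetical inputs: in this example quark jets fake taus more often than gluon jets.
for fq in (0.2, 0.5, 0.8):
    print(fq, fake_tau_estimate(1000, fq, ff_quark=0.20, ff_gluon=0.05))
```

With these inputs, the estimate changes by a factor of about two between quark fractions of 0.2 and 0.8, which is why the fractions must be known before the background is computed.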

Contact

- Faculty members working on ATLAS:
  - Elin Bergeås Kuutmann
  - Richard Brenner
  - Arnaud Ferrari
  - Rebeca Gonzalez Suarez